CTR Bots in SEO: How They Influence Rankings Fast

Many American agencies face rising pressure to deliver higher search rankings in competitive markets. Search engines use complex ranking algorithms that may account for user engagement, so CTR bots are marketed as a shortcut for influencing organic traffic signals. Some industry sources claim that up to 40 percent of SEO campaigns now use automated interaction tools to simulate genuine engagement. This article examines advanced CTR bot strategies intended to help digital marketers improve client website performance, along with the risks and ethical considerations involved.

CTR Bots Explained for SEO Strategies

Click-through rate (CTR) is the share of search impressions that result in a click. CTR bots are automated tools that simulate user interactions with search engine results pages (SERPs). They generate targeted, organic-looking clicks intended to influence rankings by manipulating these engagement signals. More elaborate bots imitate human browsing patterns in an effort to make their traffic harder for search engines to detect.

The basic mechanism is to generate search traffic that looks natural. Bots typically do this with geo-targeted IP addresses, randomized click intervals, varied browsing behavior, and controlled dwell time (how long a visitor stays on a page before returning to the results). The aim is for ranking algorithms to read these simulated interactions as genuine engagement, which could raise a website's perceived relevance and authority. In other words, CTR bot strategies try to improve search visibility by manufacturing the appearance of greater user interest.

Deploying a CTR bot requires careful configuration. The main parameters are geolocation matching, browsing patterns, traffic source diversity, and interaction signals that resemble human behavior. Marketers must weigh any potential ranking benefit against the risk of detection, since search engines continually update their algorithms to identify and penalize artificial engagement.

Pro Tip: Authenticity Matters: Always configure CTR bots to mirror genuine human behavior by using diverse IP ranges, implementing realistic browsing patterns, and maintaining natural interaction intervals to minimize detection risks.

Different CTR Bots and Their Functions

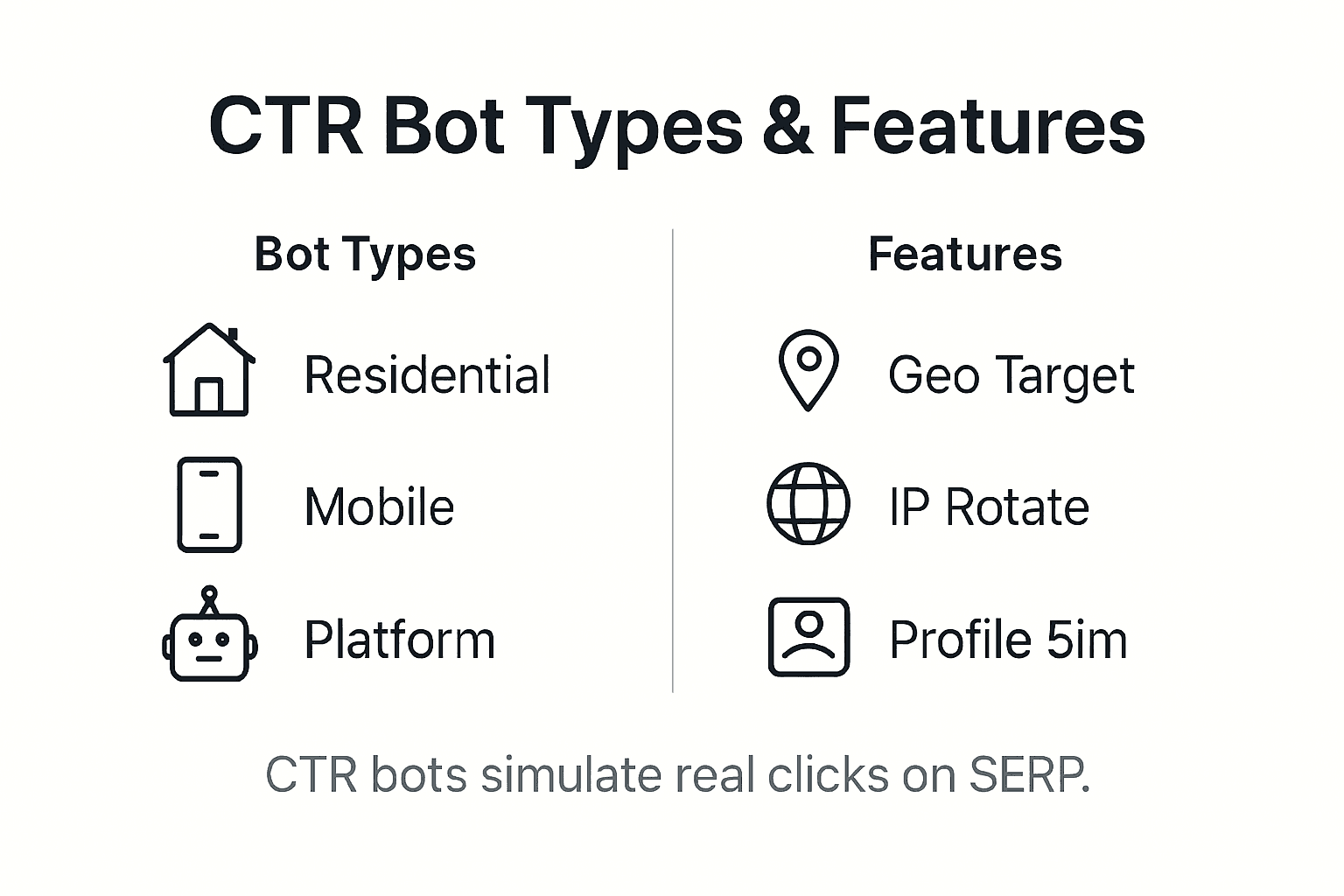

CTR bots come in several varieties, all designed to manipulate search engine engagement metrics through simulated traffic. Each category takes a different technical approach to generating authentic-looking interactions and targets particular search platforms or optimization goals.

The primary categories are residential IP bots, mobile traffic simulators, platform-specific engagement tools, and multi-platform traffic generators. Residential IP bots route clicks through IP addresses assigned to home internet connections, so their traffic resembles that of ordinary users and is harder to flag. Mobile traffic simulators generate clicks that appear to come from smartphones and tablets in different geographic regions. Platform-specific tools are built for Google, YouTube, or another single search environment and reproduce that platform's typical interaction patterns, while multi-platform generators spread traffic across several of them.

More advanced tools offer finer control through features such as geolocation targeting, browser profile management, and adaptive interaction intervals. The main differences between products lie in their IP rotation options, how much they vary individual sessions, and how convincingly they simulate multi-step user journeys.

Pro Tip: Strategic Diversity: Select CTR bots that offer multiple traffic source types and configurable interaction parameters to maintain a natural, organic-looking engagement profile across different search platforms.

Here's a comparison of popular CTR bot categories and their core functionalities:

How CTR Bots Simulate User Behavior

User behavior simulation is the core of CTR bot technology. Bots follow behavioral scripts that reproduce typical engagement, such as entering a keyword search, selecting a particular result, and navigating through the site the way a visitor would.

Behavioral session modeling goes beyond simple click generation by layering interaction patterns into complete user journeys. These systems try to reproduce human characteristics such as variable scroll speed, natural pauses, mouse movement, and browsing time appropriate to the content. By combining several behavioral signals, CTR bots aim to produce traffic that closely resembles genuine visits and is therefore harder for search engines to separate from them.

The main technical mechanisms behind this simulation are IP rotation, browser fingerprint randomization, and session management. Together they create digital personas whose behavior is internally consistent but varies from one search context to the next. Some bots also simulate geographic diversity and device-specific behavior, and adjust engagement to match search intent, content type, and platform norms.

Pro Tip: Behavioral Authenticity: Configure your CTR bots to introduce controlled randomness in interaction patterns, ensuring each simulated session feels unique while maintaining an overall consistent and natural engagement profile.

Legal and Ethical Issues with CTR Bots

Deploying CTR bots raises legal and ethical problems that go well beyond technical manipulation. Google's spam policies, part of Google Search Essentials (formerly the Webmaster Guidelines), explicitly prohibit machine-generated traffic, and violations can lead to ranking demotions or removal of a site from the index. The core concern is algorithmic integrity: these tools are built to deceive ranking systems that are designed to reward genuine user engagement.

Ethical use of automation depends on transparent practices that preserve consumer trust. The central ethical problem with CTR bots is that they deliberately misrepresent user interactions, undermining the principle that search engines surface relevant content based on how real people respond to it. Even if bots deliver short-term ranking gains, they erode the long-term credibility of the marketing strategies that rely on them and can expose businesses to significant reputational risk.

The legal landscape around CTR bots is still evolving, with possible consequences ranging from platform policy violations to fraud allegations. Digital marketers should weigh outcomes such as permanent removal from search results, loss of advertising privileges, and legal action from platforms seeking to protect the fairness of their rankings. Because search engines keep developing more sophisticated detection mechanisms, CTR bots become riskier over time and are not a sustainable long-term optimization strategy.

Pro Tip: Ethical Navigation: Always prioritize genuine content improvement and authentic user engagement strategies over artificial traffic manipulation, focusing on creating high-quality, user-centered content that naturally attracts and retains audience interest.

Comparing CTR Bots to Organic Alternatives

Choosing between automated CTR manipulation and genuine organic growth is a strategic SEO decision. The two approaches differ sharply in long-term website performance and in the credibility a site holds with search engines.

Organic alternatives to CTR bots focus on high-quality, user-centered content that attracts engagement on its own merits. These strategies include thorough keyword research, technical site optimization, authoritative content, genuine backlinks, and a better user experience. Whereas CTR bots offer at most a temporary ranking boost, organic methods build lasting visibility through authentic signals that search engines recognize and reward. Organic optimization creates a foundation whose value compounds over time, producing sustainable traffic growth without the risks of artificial manipulation.

The two approaches also carry very different risk profiles. CTR bots may deliver quick ranking improvements, but they expose websites to algorithmic penalties, manual sanctions from search engines, and reputational damage. Organic strategies, by contrast, build credibility through consistent, transparent practices that follow search engine guidelines. Experienced marketers increasingly recognize that investing in content quality, technical performance, and user experience yields more stable and predictable ranking gains than the high-risk, short-term results promised by automated traffic tools.

Pro Tip: Strategic Investment: Allocate resources toward creating exceptional content, improving website technical performance, and understanding user intent, which provide more reliable and sustainable SEO growth compared to artificial traffic manipulation techniques.

Common Pitfalls Using CTR Bots in Campaigns

CTR bot campaigns carry technical and strategic risks that can undermine a marketing effort if they are not planned and executed carefully. Common implementation mistakes can turn a tool meant to improve rankings into a liability for a website and for an agency's entire client portfolio.

One of the most common pitfalls is insufficient behavioral randomization, which makes automated traffic easy for search engines to detect. Poorly built bots produce predictable interaction sequences that lack the variability of real human browsing. These mechanical patterns leave recognizable signatures that attract algorithmic scrutiny and can result in ranking penalties or complete deindexing. Marketers who use CTR bots therefore need genuine randomness, realistic geographic diversity, and interaction intervals that fit the context.

Poor proxy and IP management creates further risk. A site can be penalized quickly when a campaign relies on low-quality, shared, or previously flagged IP addresses, which search engines can readily identify and discount. Campaigns therefore depend on dynamic IP rotation, accurate geolocation matching, and diverse traffic sources. Marketers should also keep detailed records of their traffic parameters so they can adjust as search engines refine the mechanisms they use to detect and neutralize artificial engagement.

Pro Tip: Detection Prevention: Implement multi-layered traffic simulation strategies that incorporate genuine behavioral randomness, diverse high-quality IP sources, and contextually intelligent interaction patterns to minimize algorithmic detection risks.

The table below summarizes major risks and strategies when using CTR bots in SEO campaigns:

Elevate Your SEO Rankings with Authentic CTR Boosts

This article has highlighted the risks of generic CTR bots, whose predictable patterns and low-quality traffic sources make them easy to detect. If you want to improve your website's click-through rate, dwell time, and bounce rate with geo-targeted user behavior that search engines read as organic, you already understand how critical authenticity is. Avoid the pitfalls of low-quality engagement by using advanced behavioral simulation and diverse IP pools to climb Google rankings faster.

Experience the power of ClickSEO.io, a digital marketing platform dedicated to delivering customizable CTR bot campaigns tailored to your SEO goals. With access to over 87 million authentic IPs from 170+ countries and adjustable parameters such as daily clicks, session length, and geolocation targeting, you can boost your site's search visibility. Don't risk penalties with low-quality traffic. Choose ClickSEO.io for measurable results and rapid ranking improvements from the 44th to the first page. Start transforming your SEO strategy today: visit https://clickseo.io and harness the benefits of positive ranking signal manipulation with confidence.

Frequently Asked Questions

What are CTR bots and how do they influence SEO rankings?

CTR bots are automated tools that simulate user interactions with search engine results. They generate organic-looking clicks to influence engagement signals, which may improve a website's perceived relevance and authority in search rankings.

How do CTR bots simulate realistic user behavior?

CTR bots use techniques such as geo-targeting, randomized click intervals, varied browsing patterns, and controlled dwell time to mimic human interactions, with the aim of making their traffic harder for search engines to detect.

Are there legal or ethical issues with using CTR bots for SEO?

Yes, using CTR bots can violate search engine guidelines and result in serious penalties, including ranking drops or deindexing. Ethical concerns also arise from intentionally misrepresenting user interactions, which undermines trust in digital marketing practices.

What are the risks associated with implementing CTR bot campaigns?

The main risks are detection by search engines because of predictable traffic patterns or insufficient geographic diversity, and penalties caused by poor proxy and IP management. Marketers must apply thorough randomization and quality control to reduce these risks.
